While we can talk all day about how great LEADTOOLS is, it's always meaningful to hear a review from an outside developer. Today, we're sharing a review of our LEADTOOLS OCR Module - LEAD Engine, written by Jeffrey T. Fritz.

I live in an older house that loves to bother me with the joys of home ownership and maintenance: plumbing issues, strange electrical installation choices, and a 30-year-old furnace that burns oil. At first, the oil burner wasn’t a big deal, because it meant I wasn’t paying for electric heat. However, it did mean that my basement had a huge oil tank hiding in it that would need to be refilled regularly during the cold winters. That shouldn’t be a problem... but I would never know the level of the oil in the tank unless I wandered down to the basement and read an old gauge mounted on top of the tank.

As a technologist, I found this daily task of reading the oil tank gauge tiresome, and I had no way to know how quickly I was burning oil. What is the correlation between average daily temperature and the amount of oil my furnace burns? I started solving the problem with a pad of paper, writing down the daily readings. BORING! Who wants to read hard copy? I want this data on my phone, and I want it to be collected without my daily trip to the basement to read the gauge.

My Proposed Solution

I had been tinkering with a Raspberry Pi and a camera for some time, without any real problem to solve. After I paid my oil bill for the coming year, it hit me that I could mount the Pi and camera to take a picture of the oil tank gauge on a regular basis. I would write some code to take the picture and upload it to an Azure queue, where it could be analyzed to give me a dataset of the tank’s oil level. I knew how to trigger the Pi to take a picture and upload the image; I just needed the secret sauce to analyze that image of the gauge and determine the current reading.

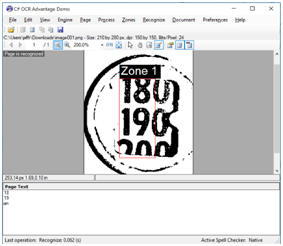

Figure 1 - One of my test snapshots of the oil tank gauge

I explored several OCR solutions, testing them with a few photos of the gauge that I snapped with my phone. This seemed like a simple test: there is an easy-to-read number in the middle that needs to be identified. In fact, as you can see from my sample image in figure 1, I only need to read the left-most two digits, because the last digit is always zero. In this case, detecting the 19 implies the zero at the end, so I can tell that approximately 190 gallons are in the tank.
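The reading rule above is simple enough to state in code. The sketch below is illustrative only: ToGallons is a hypothetical helper, not part of LEADTOOLS, and it assumes the OCR step has already produced the two leading digits as a string.

```csharp
using System;

// Hypothetical helper, not a LEADTOOLS API. The window shows the leading
// digits and the last digit is always zero, so "19" means about 190 gallons.
// Returns null when the text is not a number (for example, OCR noise).
static int? ToGallons(string windowDigits) =>
    int.TryParse(windowDigits.Trim(), out var tens) ? tens * 10 : (int?)null;

Console.WriteLine(ToGallons("19")); // 190
```

Returning null for unparseable text keeps a misread frame from being stored as a bogus reading.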

The solution that was the most accurate, and that worked for me as a relative newbie with OCR technologies, was the LEADTOOLS OCR Advantage Module. Let’s take a look at some of the code I wrote for an Azure Web Job that reads the image from an Azure queue, processes it, and stores the output for later use.

But first... some testing

I knew what I wanted to do with my image, but I needed to visualize the solution. As a developer who primarily works with web pages and databases, I found analyzing an image like this to be foreign territory. Fortunately, the LEADTOOLS installation makes a whole bunch of demo code available, including the OcrAdvantageDemo Windows Forms application. This demo allowed me to load my image and test the steps I wanted to automate. I emailed the LEADTOOLS customer service folks asking for some tips and shared the sample image I was going to work on. They were simply great, writing back within a few hours with details about how they would process the image with the OcrAdvantageDemo and pointing me to the sample code I would need. I took their advice, loaded up the demo, and started tinkering with my sample image.

I cropped it, made it black and white, and used the Autozone tool to try to identify areas that contained text. Unfortunately, the quality of my image made it difficult for the Autozone feature to accurately locate only the items in the gauge window. I shifted tactics and used the zone selection tool to draw a box around those first two digits on my gauge, as shown in figure 2. The demo has a very simple "Recognize" function that I used to determine what it found in the zone I created. As you can see from figure 2, I hit the jackpot: it detected the digits "18" and "19". The final reading of "an" works for me; it’s not the value I’m looking for, but it does identify that there is a third value peeking into the bottom of the gauge. With three values appearing in the image, I can assume that the middle value is the current reading. If only two values were identified, I could infer that the current value was somewhere between the two.
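The inference described above (three rows means the middle one is the reading; two rows means the reading lies between them) could be expressed as a small helper. This is a sketch under stated assumptions: InferReading is hypothetical, the rows arrive top to bottom as strings, and any other row count is treated as no reliable reading.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical helper, not a LEADTOOLS API. rows are the recognized lines of
// the gauge zone from top to bottom, e.g. "18", "19", "an".
// Returns (Low, High) in window digits; Low == High means an exact reading.
static (int Low, int High)? InferReading(IReadOnlyList<string> rows)
{
    var lines = rows.Select(r => r.Trim()).Where(r => r.Length > 0).ToList();

    // Three rows visible: the middle row sits at the indicator.
    if (lines.Count == 3 && int.TryParse(lines[1], out var middle))
        return (middle, middle);

    // Two rows visible: the reading lies somewhere between them.
    if (lines.Count == 2 &&
        int.TryParse(lines[0], out var first) &&
        int.TryParse(lines[1], out var second))
        return (Math.Min(first, second), Math.Max(first, second));

    // Anything else is treated as no reliable reading.
    return null;
}

Console.WriteLine(InferReading(new[] { "18", "19", "an" })); // (19, 19)
```

Note that the third row only has to be present, not readable, which matches how the partially visible "an" is used here.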

Using this demo, I navigated to the Zones -> Update Zones menu item and grabbed the coordinates for the box I drew:

- Left: 71

- Top: 66

- Width: 61

- Height: 145
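These four numbers describe a rectangle in pixel coordinates, with y increasing downward. The quick sketch below computes the edges and vertical midpoint they imply, assuming every cropped image shares the same geometry; the midpoint is where the volume calculation later in this article assumes the indicator arrow sits.

```csharp
using System;

// Zone captured in the OcrAdvantageDemo (Zones -> Update Zones), in pixels.
// Image coordinates: x grows to the right, y grows downward.
var zone = (Left: 71, Top: 66, Width: 61, Height: 145);

// Edges implied by the zone: x runs 71..132 and y runs 66..211.
int right = zone.Left + zone.Width;
int bottom = zone.Top + zone.Height;

// Vertical centre of the gauge window (66 + 145 / 2 = 138.5).
double midline = zone.Top + zone.Height / 2.0;

Console.WriteLine($"x {zone.Left}..{right}, y {zone.Top}..{bottom}, midline {midline}");
```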

I have what I need; now I can write some code.

Writing the image recognition function

I broke up the recognition steps into two functions, PreProcessImage and ReadGauge, which mirror the two steps I took with the OcrAdvantageDemo. In PreProcessImage, I embedded calls to the AutoCrop, AutoColorLevel, and AutoBinarize commands to trim the image, even out its colors, and convert it to black-and-white format for processing:

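```csharp
using System.IO;
using Leadtools;
using Leadtools.Codecs;
using Leadtools.ImageProcessing;        // AutoCropCommand
using Leadtools.ImageProcessing.Color;  // AutoColorLevelCommand
using Leadtools.ImageProcessing.Core;   // AutoBinarizeCommand

private static RasterImage PreProcessImage(Stream inputStream)
{
    RasterImage img;

    // Load the image (JPG or PNG) with the codecs deployed alongside the app
    using (var codecs = new RasterCodecs())
    {
        img = codecs.Load(inputStream);
    }

    // Crop the image, trimming its border
    var autoCrop = new AutoCropCommand();
    autoCrop.Run(img);

    // Enhance the colors in the image to compensate for lighting
    var autoColor = new AutoColorLevelCommand();
    autoColor.Run(img);

    // Convert to black and white
    var autoBin = new AutoBinarizeCommand();
    autoBin.Run(img);

    return img;
}
```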

The using block in this function creates the RasterCodecs object that LEADTOOLS uses to read the image. In my case, I am passing a Stream into this method that contains either a JPG or PNG formatted image. RasterCodecs automatically loads any codec DLLs that are available in the folder our solution is deployed to. I have included "Copy Local" references to Leadtools.Codecs.Png.dll and Leadtools.Codecs.Cmp.dll to handle these file formats.
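Because only the PNG and CMP codec assemblies are deployed, it can help to check what an uploaded stream contains before handing it to RasterCodecs. The sketch below is an illustration rather than part of the author’s solution: SniffImageFormat is a hypothetical helper that compares the first bytes against the standard PNG signature (89 50 4E 47 0D 0A 1A 0A) and the JPEG start-of-image marker (FF D8 FF), and it assumes a seekable stream such as a MemoryStream.

```csharp
using System;
using System.IO;
using System.Linq;

// Hypothetical helper (not a LEADTOOLS API). Assumes a seekable stream;
// the original position is restored so the caller can still load the image.
static string SniffImageFormat(Stream stream)
{
    byte[] pngSignature = { 0x89, 0x50, 0x4E, 0x47, 0x0D, 0x0A, 0x1A, 0x0A };
    byte[] jpegSignature = { 0xFF, 0xD8, 0xFF };

    long start = stream.Position;
    var header = new byte[pngSignature.Length];
    int read = 0, n;
    // Read may return fewer bytes than requested, so loop until full or EOF.
    while (read < header.Length && (n = stream.Read(header, read, header.Length - read)) > 0)
        read += n;
    stream.Position = start;

    bool Matches(byte[] signature) =>
        read >= signature.Length && header.Take(signature.Length).SequenceEqual(signature);

    if (Matches(pngSignature)) return "png";   // Leadtools.Codecs.Png.dll
    if (Matches(jpegSignature)) return "jpeg"; // Leadtools.Codecs.Cmp.dll
    return "unknown";
}

using var sample = new MemoryStream(new byte[] { 0xFF, 0xD8, 0xFF, 0xE0, 0x00 });
Console.WriteLine(SniffImageFormat(sample)); // jpeg
```

An "unknown" result could be logged and the queue message skipped rather than passed to RasterCodecs.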

The RasterImage returned from this method has been trimmed by the auto-crop command, converted to black and white, and is ready to be analyzed. I took my notes on where the zone was located on my gauge and copied them into my new ReadGauge method:

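```csharp
using System.Collections.Generic;
using System.Linq;
using Leadtools;
using Leadtools.Forms;
using Leadtools.Forms.Ocr;

private static List<string> ReadGauge(RasterImage img)
{
    var foundText = "";

    // Dispose of the engine as soon as the analysis run is finished
    using (var engine = OcrEngineManager.CreateEngine(OcrEngineType.Advantage, false))
    {
        engine.Startup(null, null, null, null);

        using (var page = engine.CreatePage(img, OcrImageSharingMode.None))
        {
            // Zone coordinates captured with the OcrAdvantageDemo (in pixels)
            var numberZone = new OcrZone();
            numberZone.Bounds = new LogicalRectangle(71, 66, 61, 145, LogicalUnit.Pixel);

            // Only digits should appear in the gauge window
            numberZone.CharacterFilters = OcrZoneCharacterFilters.Digit;
            page.Zones.Add(numberZone);

            page.Recognize(null);

            try
            {
                foundText = page.GetText(0);
            }
            catch (RasterException)
            {
                // no data found
                foundText = "<< no data found >>";
            }
        }
    }

    // One entry per row; Trim() removes any '\r' left by Windows line endings
    return foundText.Split('\n').Select(row => row.Trim()).ToList();
}
```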

Let’s take a look at what’s happening in this function. After initializing a string to receive the text found on the image, I create the OCR engine as an Advantage engine, the LEADTOOLS advanced OCR engine for recognizing text in images. It is multi-threaded and takes full advantage of a multi-core CPU, so we had better clean it up after use rather than accidentally keep it around longer than needed. I declared the engine inside a using statement so that it is properly disposed of at the end of the analysis run.

Next, I start up the engine and use the CreatePage method to wrap the image in an OCR "page" that the engine will analyze.

The OCR zones are configured next, and in my code I used the zone location that I identified using the OCR Advantage Demo. The Autozone feature would work great if this were a PDF document or a photo of a document, but this is a dirty gauge in a basement that will always present its text in the same location in my photo. The OcrZone’s Bounds property is assigned the coordinates of the rectangle that I captured earlier. I also configured the zone to identify only digits, as all values that should be visible in the gauge are numbers.

Next, the magic happens: I add the zone to the OCR page and call the Recognize() method. Its only parameter is an optional progress callback that I could use to report progress or abort a long-running recognition; I pass null because this method runs on a server somewhere, and I don’t care how long it takes.

I wrapped the GetText() call in a try-catch block to handle the case where no text is found: this version of LEADTOOLS OCR throws a RasterException if no text was identified on the page when you attempt to read it. If text was found, I return it as a List of the rows of digits identified.

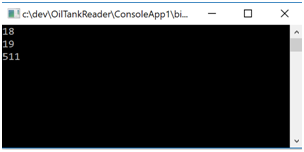

When I run this code and dump the results of the identification to a console window, I get the following output:

Perfect! Analyzing this image was split-second fast, and I now have a very accurate middle value that I can save into my database as a reading of "190" on the tank.

Alternative Design Options

This was a simple interaction with LEADTOOLS to read data from an uploaded image and save it. I could have used the same OCR commands and tools in an iPhone application to analyze the photo directly on my phone and save the value to a database, but in my scenario that would mean taking a picture with my phone every day.

I could have written a .NET Standard 2.0 library that uses LEADTOOLS OCR to analyze the image directly on the Raspberry Pi and upload only the recognized digits. That would have removed the need to process or store images on the web. I liked this approach, but I wanted to keep the images available so that I could do further analysis from my final application if I desired.

As I analyze these images further, I can use the GetRecognizedCharacters() method of the OcrPage object to get more information about the text identified. Let’s take a look at what that might look like if I replace the direct call to "GetText()" with:

```csharp
foundText = CalculateExactVolume(page.GetText(0), page.GetRecognizedCharacters()[0]);
```

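Now we’re passing CalculateExactVolume both the recognized text and the recognized characters for the first (and only) zone on the page, which carry the complete set of information the OCR engine returned about each character, including its bounds. The CalculateExactVolume method looks like the following: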

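```csharp
using System;
using System.Linq;
using Leadtools.Forms;
using Leadtools.Forms.Ocr;

// The same zone used in ReadGauge
private static readonly LogicalRectangle GaugeWindow =
    new LogicalRectangle(71, 66, 61, 145, LogicalUnit.Pixel);

// static so that it can be called from the static ReadGauge method
private static string CalculateExactVolume(string foundText, IOcrZoneCharacters ocrCharacters)
{
    // Index of the first digit of the second (middle) row in the character list.
    // Assumes the list has no entries for line breaks, so the index equals the
    // length of the first row without its line ending ('\r\n' or '\n').
    var charCount = foundText.Split('\n')[0].TrimEnd('\r').Length;

    // Grab the raw OCR data for that digit
    var foundCharacter = ocrCharacters.ElementAt(charCount);

    var digitHeight = foundCharacter.Bounds.Height;
    var indicatorPosition = GaugeWindow.Y + GaugeWindow.Height / 2; // indicator arrow is in the middle
    var digitBottom = foundCharacter.Bounds.Bottom;
    var distanceFromBottom = digitBottom - indicatorPosition;

    // -5 when the indicator is at the top of the digit, 0 at its centre, +5 at its bottom
    var adjustment = ((digitHeight - distanceFromBottom) / digitHeight) * 10 - 5;

    var txt = double.Parse(foundText.Split('\n')[1].Trim() + "0") + adjustment;
    return Math.Round(txt, 1).ToString();
}
```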

The charCount variable identifies the position of the first digit of the second number identified in the window, so that foundCharacter can be assigned the raw OCR data about that digit. The next few lines do some math with the location of that digit within the rectangle that defines the boundaries of the gauge we processed earlier (GaugeWindow). The indicatorPosition, assumed to be in the middle of the GaugeWindow, is compared with the position of the digit we identified. When the indicator points at the exact middle of the digit, the rounded value (in this case 190) is returned. When the indicator points at the bottom of a digit, the adjustment adds 5 to the digit’s value (195 if the indicator were pointing at the bottom of our 19), and when it points at the top, the adjustment subtracts 5. For our image, I can calculate that the volume in the tank is 188.1, because the 190 has started to scroll down past the indicator.
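To sanity-check this arithmetic without LEADTOOLS, the sketch below restates it as plain functions. It is a hypothetical restatement, not the author’s code: CharIndexOfRow and AdjustedVolume are illustrative names, and the sketch assumes the OCR character list contains no line-break entries and that the character bounds and the gauge window share the same pixel coordinates. Writing h for the digit height and d for the distance from the digit’s bottom edge down to the indicator line, the adjustment is ((h - d) / h) * 10 - 5, which is -5 when d = h (arrow at the top of the digit), 0 when d = h/2, and +5 when d = 0.

```csharp
using System;
using System.Linq;

// Hypothetical helpers restating CalculateExactVolume without LEADTOOLS.

// Index of the first character of a given row within a character list that
// holds only recognized characters (no line-break entries).
static int CharIndexOfRow(string zoneText, int row) =>
    zoneText.Split('\n')
            .Take(row)
            .Sum(line => line.TrimEnd('\r').Length);

// windowTop/windowHeight: the gauge zone; digitBottom/digitHeight: bounds of
// the middle row's first digit; windowDigits: that row's text, e.g. "19".
// y grows downward, so digitBottom > indicator means the digit's bottom edge
// is below the arrow.
static double AdjustedVolume(string windowDigits, double windowTop, double windowHeight,
                             double digitBottom, double digitHeight)
{
    double indicator = windowTop + windowHeight / 2;     // arrow assumed at the centre
    double distanceFromBottom = digitBottom - indicator; // 0 when the arrow is at the digit's bottom
    double adjustment = (digitHeight - distanceFromBottom) / digitHeight * 10 - 5;
    return Math.Round(double.Parse(windowDigits.Trim() + "0") + adjustment, 1);
}

Console.WriteLine(CharIndexOfRow("18\r\n19\r\nan", 1));      // 2
Console.WriteLine(AdjustedVolume("19", 66, 145, 158.5, 40)); // 190: arrow at the digit's centre
```

With the window geometry from this article (top 66, height 145) and a hypothetical 40-pixel-tall digit, moving the digit’s bottom edge from 178.5 to 158.5 to 138.5 walks the result from 185 to 190 to 195.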

Summary

The simple API of the LEADTOOLS OCR libraries made it easy for me to add their technology to this hobby project of mine. I can now rely on their rich API to deliver accurate locations of the digits in my oil tank’s gauge. I will wrap up my code as an Azure Web Job and configure it to analyze the photos that my Raspberry Pi will capture and upload for me. Check out the complete code with my sample images attached to this article, and catch me on my blog at http://jeffreyfritz.com as I build the Raspberry Pi portion of this analog oil gauge reader.