Implement an AWS S3 Bucket Cache - C# .NET

This tutorial shows how to implement an Amazon Web Services (AWS) S3 bucket cache with the LEADTOOLS Document Library for use with our HTML5/JS Document Viewer.

| Overview | |
|---|---|
| Summary | This tutorial covers how to use an Amazon Web Services (AWS) S3 bucket cache in an application that uses the LEADTOOLS Document Library. |
| Completion Time | 30 minutes |
| Platform | .NET Framework |
| IDE | Visual Studio 2022 |
| Runtime License | Download LEADTOOLS |

Required Knowledge

This implementation is a complete example that can be used in a production environment. It uses JSON serialization, which keeps cached data compatible across different LEADTOOLS versions. For more information on serializing cache data, refer to CacheSerializationMode.
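With JSON serialization, each cached value is written to the bucket as JSON text and read back by deserializing that text into the requested type. The sketch below shows that round trip in isolation, assuming an in-memory dictionary in place of the S3 bucket. It uses System.Text.Json instead of Newtonsoft.Json so it has no package dependencies, and the `JsonRoundTrip` and `CachedPageInfo` classes are hypothetical, not LEADTOOLS or AWS SDK types.

```csharp
using System.Collections.Generic;
using System.Text;
using System.Text.Json;

// Hypothetical sample type standing in for a value the Document Library might cache.
public class CachedPageInfo
{
   public int Number { get; set; }
   public string Name { get; set; }
}

// Hypothetical stand-in for the S3 bucket: each object key maps to the stored bytes.
public static class JsonRoundTrip
{
   private static readonly Dictionary<string, byte[]> Bucket = new Dictionary<string, byte[]>();

   // Serialize the value to JSON and store it as UTF-8 bytes, the way an S3 object body holds it.
   public static void Put<T>(string objectKey, T value)
   {
      string json = JsonSerializer.Serialize(value);
      Bucket[objectKey] = Encoding.UTF8.GetBytes(json);
   }

   // Read the stored bytes back and deserialize them into the requested type.
   public static T Get<T>(string objectKey)
   {
      return JsonSerializer.Deserialize<T>(RawJson(objectKey));
   }

   // The JSON text exactly as stored.
   public static string RawJson(string objectKey)
   {
      return Encoding.UTF8.GetString(Bucket[objectKey]);
   }
}
```

Only the JSON text is stored, so a reader that expects the same shape can deserialize the entry regardless of which build wrote it.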

Note

This implementation does not expire cache entries. If you want S3 to delete cached objects after a certain period has passed, configure an expiration rule in the bucket's S3 Lifecycle configuration.
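If you add an S3 Lifecycle expiration rule, its period should be at least as long as the longest expiration the Document Service requests; otherwise S3 may delete entries that are still in use. Lifecycle expiration periods are given in whole days. The sketch below converts an expiration into a day count, assuming the same rule the S3BucketCache class applies (a positive sliding expiration takes precedence over the absolute expiration). `ExpiryHelper` and its methods are hypothetical and take plain `DateTime`/`TimeSpan` values rather than a LEADTOOLS `CacheItemPolicy`.

```csharp
using System;

// Hypothetical helper; not part of LEADTOOLS or the AWS SDK.
public static class ExpiryHelper
{
   // Time remaining until expiry, measured from nowUtc.
   // A positive sliding expiration takes precedence over the absolute expiration.
   public static TimeSpan TimeToExpiry(DateTime absoluteExpirationUtc, TimeSpan slidingExpiration, DateTime nowUtc)
   {
      DateTime expiryDate = absoluteExpirationUtc;
      if (slidingExpiration > TimeSpan.Zero)
         expiryDate = nowUtc.Add(slidingExpiration);

      return expiryDate.Subtract(nowUtc);
   }

   // Smallest whole number of days that covers the given time span (never less than 1).
   public static int LifecycleDaysFor(TimeSpan timeToExpiry)
   {
      int days = (int)Math.Ceiling(timeToExpiry.TotalDays);
      return Math.Max(days, 1);
   }
}
```

For example, a 30-hour expiration needs a rule of at least 2 days, and anything shorter than a day still maps to 1 day.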

Before any functionality from the SDK can be used, a valid runtime license must be set.

For instructions on how to obtain a runtime license, refer to Obtaining a License.

Open the Project

This tutorial builds on the .NET Document Service and the JS Document Viewer. To open the project, navigate to <INSTALL_DIR>\Examples\Viewers\JS\DocumentViewerDemo and open the DocumentViewerDemo.sln solution file.

The Document Service projects are set up to run alongside many of our HTML5/JS demos out of the box (e.g., DocumentViewerDemo). For step-by-step instructions on configuring and running the .NET Framework Document Service, see the Configure and Run the Document Service tutorial.

Open the Document Viewer and Document Service Project

Open DocumentViewerDemo.sln to work with both the Document Service and the Document Viewer.

The Document Service launches at http://localhost:40000, and the JS Document Viewer launches at https://localhost:20010.

Set the License File

The license unlocks the features needed for the project. It must be set before any toolkit function is called. For details, including tutorials for different platforms, refer to the Setting a Runtime License tutorial.

There are two types of runtime licenses:

- Evaluation license, obtained at the time the evaluation toolkit is downloaded. It allows the toolkit to be evaluated.

- Deployment license. If you need a Deployment license file and developer key, refer to Obtaining a License.

Configure the Project

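This example code requires the following NuGet packages:

- AWSSDK.S3
- Newtonsoft.Json (used for JSON serialization; add it if the project does not already reference it)

The S3FileInfo class (Amazon.S3.IO namespace) used by the cache is only available in the .NET Framework build of AWSSDK.S3.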

Create and Implement the S3BucketCache Class

In the Solution Explorer, right-click the DocumentService project and select Add -> New Item. Create a new Class, name it S3BucketCache.cs, and click Add.

Open the S3BucketCache.cs file and add the code below to create the S3BucketCache class.

```csharp
using System;
using System.Collections.Generic;
using System.IO;
using Newtonsoft.Json;
using Amazon.S3;
using Amazon.S3.IO;
using Amazon.S3.Model;
using Leadtools.Caching;

namespace Leadtools.Services.Tools.Cache
{
   /// <summary>
   /// Wraps an AWS S3 AmazonS3Client object to be used with the LEADTOOLS Document Library
   /// </summary>
   public class S3BucketCache : ObjectCache
   {
      private S3BucketCache() { }

      // S3 client used to connect to the S3 instance
      private readonly AmazonS3Client _client;
      // Name of the bucket holding all cache entries
      private readonly string _bucketName;

      /// <summary>
      /// Initializes a LEADTOOLS ObjectCache wrapper from an AWS S3 client
      /// </summary>
      /// <param name="client">Fully initialized AmazonS3Client object ready to be used.</param>
      /// <param name="bucketName">Name of the bucket used for the cache in your S3 implementation.</param>
      public S3BucketCache(AmazonS3Client client, string bucketName = "leadbucketcache")
      {
         if (client == null)
            throw new ArgumentNullException(nameof(client));

         _client = client;
         // If you do not pass a bucket name, "leadbucketcache" is used.
         // S3 bucket names must be lowercase.
         _bucketName = bucketName;
      }

      //
      // These members must be implemented by our class and are called by the Document toolkit
      //

      // Name of the cache
      public override string Name
      {
         get { return "S3BucketCache"; }
      }

      // Use JSON serialization for our policy and data files
      public override CacheSerializationMode PolicySerializationMode
      {
         get { return CacheSerializationMode.Json; }
         set { throw new NotSupportedException(); }
      }

      public override CacheSerializationMode DataSerializationMode
      {
         get { return CacheSerializationMode.Json; }
         set { throw new NotSupportedException(); }
      }

      // We have no special extra support
      public override DefaultCacheCapabilities DefaultCacheCapabilities
      {
         get { return DefaultCacheCapabilities.None; }
      }

      // Called when a cache item is added.
      // Must return the old value (null if there was none)
      public override CacheItem<T> AddOrGetExisting<T>(CacheItem<T> item, CacheItemPolicy policy)
      {
         if (item == null)
            throw new ArgumentNullException(nameof(item));

         CacheItem<T> oldItem = null;

         // Try to get the old value
         if (Contains(item.Key, item.RegionName))
         {
            T oldValue = GetFromCache<T>(item.RegionName, item.Key);
            oldItem = new CacheItem<T>(item.Key, oldValue, item.RegionName);
         }

         // Set the new data
         AddToCache(item, policy);

         // Return the old item
         return oldItem;
      }

      public override CacheItem<T> GetCacheItem<T>(string key, string regionName)
      {
         // If we have an item with this key, return it. Otherwise, return null
         CacheItem<T> item = null;
         if (Contains(key, regionName))
         {
            T itemValue = GetFromCache<T>(regionName, key);
            item = new CacheItem<T>(key, itemValue, regionName);
         }
         return item;
      }

      // Check if the key is in the S3 bucket
      public override bool Contains(string key, string regionName)
      {
         var resolvedKey = ResolveKey(regionName, key);
         S3FileInfo fileInfo = new S3FileInfo(_client, _bucketName, resolvedKey);
         return fileInfo.Exists;
      }

      public override bool UpdateCacheItem<T>(CacheItem<T> item)
      {
         // Update the item only if it already exists
         if (item == null)
            throw new ArgumentNullException(nameof(item));

         var exists = Contains(item.Key, item.RegionName);
         if (exists)
            AddToCache(item, null);

         return exists;
      }

      public override T Remove<T>(string key, string regionName)
      {
         // Remove if it exists and return the old value
         T existingValue = default(T);
         if (Contains(key, regionName))
            existingValue = GetFromCache<T>(regionName, key);

         DeleteItem(key, regionName);
         return existingValue;
      }

      public override void DeleteItem(string key, string regionName)
      {
         var resolvedKey = ResolveKey(regionName, key);
         // Delete the object; S3 does not return an error if the key does not exist
         _client.DeleteObject(_bucketName, resolvedKey);
      }

      private static string ResolveKey(string regionName, string key)
      {
         // Both must be non-empty strings
         if (string.IsNullOrEmpty(regionName))
            throw new InvalidOperationException("Region name must be a non-empty string");
         if (string.IsNullOrEmpty(key))
            throw new InvalidOperationException("Key must be a non-empty string");

         // Neither regionName nor key is unique on its own, but combined they are.
         // The region name also becomes a folder-like prefix in the bucket.
         return regionName + "/" + regionName + "-" + key;
      }

      private void AddToCache<T>(CacheItem<T> item, CacheItemPolicy policy)
      {
         // JSON serialize the value
         var json = JsonConvert.SerializeObject(item.Value);

         // If a sliding expiration is used, convert it to an absolute value.
         // S3 has no per-object time-to-live, so this value is not sent with the request;
         // expiration must be configured with an S3 Lifecycle rule instead.
         TimeSpan? expiry = null;
         if (policy != null)
         {
            var expiryDate = policy.AbsoluteExpiration;
            if (policy.SlidingExpiration > TimeSpan.Zero)
               expiryDate = DateTime.UtcNow.Add(policy.SlidingExpiration);

            // Convert the date to a time span from now (all UTC)
            expiry = expiryDate.Subtract(DateTime.UtcNow);
         }

         var resolvedKey = ResolveKey(item.RegionName, item.Key);
         PutObjectRequest request = new PutObjectRequest
         {
            BucketName = _bucketName,
            Key = resolvedKey,
            ContentBody = json,
            ContentType = "application/json"
         };
         _client.PutObject(request);
      }

      private T GetFromCache<T>(string regionName, string key)
      {
         var resolvedKey = ResolveKey(regionName, key);
         using (GetObjectResponse response = _client.GetObject(_bucketName, resolvedKey))
         using (var reader = new StreamReader(response.ResponseStream))
         {
            var fileContents = reader.ReadToEnd();
            return JsonConvert.DeserializeObject<T>(fileContents);
         }
      }

      public override void UpdatePolicy(string key, CacheItemPolicy policy, string regionName)
      {
         // Nothing to do
      }

      //
      // These members must be overridden by our class but are never called by the Document toolkit,
      // so they just throw a NotSupportedException
      //

      // This is for default region support. We do not have that
      public override object this[string key]
      {
         get { throw new NotSupportedException(); }
         set { throw new NotSupportedException(); }
      }

      // Delete a region in one shot. We do not support that
      // Note: This is only called if we have DefaultCacheCapabilities.CacheRegions. Since we do not, the caller is responsible for
      // calling DeleteAll passing all the items of the region (which in turn will call DeleteItem for each)
      public override void DeleteRegion(string regionName)
      {
         throw new NotSupportedException();
      }

      // Begin adding an external resource. We do not support that
      // Note: This is only called if we have DefaultCacheCapabilities.ExternalResources
      public override Uri BeginAddExternalResource(string key, string regionName, bool readWrite)
      {
         throw new NotSupportedException();
      }

      // End adding an external resource. We do not support that
      // Note: This is only called if we have DefaultCacheCapabilities.ExternalResources
      public override void EndAddExternalResource<T>(bool commit, string key, T value, CacheItemPolicy policy, string regionName)
      {
         throw new NotSupportedException();
      }

      // Get the item external resource. We do not support that
      // Note: This is only called if we have DefaultCacheCapabilities.ExternalResources
      public override Uri GetItemExternalResource(string key, string regionName, bool readWrite)
      {
         throw new NotSupportedException();
      }

      // Remove the item external resource. We do not support that
      // Note: This is only called if we have DefaultCacheCapabilities.ExternalResources
      public override void RemoveItemExternalResource(string key, string regionName)
      {
         throw new NotSupportedException();
      }

      // Get the item virtual directory path. We do not support that
      // Note: This is only called if we have DefaultCacheCapabilities.VirtualDirectory
      public override Uri GetItemVirtualDirectoryUrl(string key, string regionName)
      {
         throw new NotSupportedException();
      }

      // Getting the number of items in the cache. We do not support that
      public override long GetCount(string regionName)
      {
         throw new NotSupportedException();
      }

      // Statistics. We do not support that
      public override CacheStatistics GetStatistics()
      {
         throw new NotSupportedException();
      }

      // Statistics. We do not support that
      public override CacheStatistics GetStatistics(string key, string regionName)
      {
         throw new NotSupportedException();
      }

      // Getting all the values. We do not support that
      public override IDictionary<string, object> GetValues(IEnumerable<string> keys, string regionName)
      {
         throw new NotSupportedException();
      }

      // Enumeration of the items. We do not support that
      protected override IEnumerator<KeyValuePair<string, object>> GetEnumerator()
      {
         throw new NotSupportedException();
      }

      // Enumeration of the keys. We do not support that
      public override void EnumerateKeys(string region, EnumerateCacheEntriesCallback callback)
      {
         throw new NotSupportedException();
      }

      // Enumeration of regions. We do not support that
      public override void EnumerateRegions(EnumerateCacheEntriesCallback callback)
      {
         throw new NotSupportedException();
      }
   }
}
```
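The rules the Document Library relies on can be hard to see through the S3 calls. The sketch below replays them against an in-memory dictionary instead of a bucket: keys are resolved as `<region>/<region>-<key>` exactly as in the ResolveKey method above, adding returns the previous value, updating succeeds only for an existing entry, and removing returns the value that was deleted. `InMemoryRegionCache` is a hypothetical class, not a LEADTOOLS type; unlike S3BucketCache it stores values directly rather than as JSON and returns `default(T)` instead of a null `CacheItem<T>` when there is no previous value.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical in-memory stand-in for S3BucketCache; a dictionary replaces the bucket.
public class InMemoryRegionCache
{
   private readonly Dictionary<string, object> _objects = new Dictionary<string, object>();

   // Same mapping as S3BucketCache.ResolveKey: "<region>/<region>-<key>".
   public static string ResolveKey(string regionName, string key)
   {
      if (string.IsNullOrEmpty(regionName))
         throw new InvalidOperationException("Region name must be a non-empty string");
      if (string.IsNullOrEmpty(key))
         throw new InvalidOperationException("Key must be a non-empty string");
      return regionName + "/" + regionName + "-" + key;
   }

   public bool Contains(string key, string regionName)
   {
      return _objects.ContainsKey(ResolveKey(regionName, key));
   }

   // Stores the new value and returns the previous one (default(T) when there was none).
   public T AddOrGetExisting<T>(string key, string regionName, T value)
   {
      string objectKey = ResolveKey(regionName, key);
      T oldValue = _objects.TryGetValue(objectKey, out object existing) ? (T)existing : default(T);
      _objects[objectKey] = value;
      return oldValue;
   }

   // Overwrites the value only if the entry already exists.
   public bool Update<T>(string key, string regionName, T value)
   {
      string objectKey = ResolveKey(regionName, key);
      if (!_objects.ContainsKey(objectKey))
         return false;
      _objects[objectKey] = value;
      return true;
   }

   // Deletes the entry and returns its previous value (default(T) when it was missing).
   public T Remove<T>(string key, string regionName)
   {
      string objectKey = ResolveKey(regionName, key);
      T oldValue = _objects.TryGetValue(objectKey, out object existing) ? (T)existing : default(T);
      _objects.Remove(objectKey);
      return oldValue;
   }
}
```

Because every key of a region shares the `<region>/` prefix, all entries of one document region appear together under a single folder when you browse the bucket.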

Create the Cache Manager

Unlike the offline Document Viewer, the Document Service accesses its cache through a cache manager (an ICacheManager implementation), so the S3 cache must be exposed through one.

In the Solution Explorer, right-click the DocumentService project and select Add -> New Item. Create a new Class, name it S3BucketCacheManager.cs, and click Add.

Open the S3BucketCacheManager.cs file and add the code below to create the S3BucketCacheManager class.

```csharp
using System;
using System.Collections.Generic;
using System.Diagnostics;
using System.Xml.Linq;
using Amazon;
using Amazon.S3;
using Leadtools.Caching;
using Leadtools.Document;
using Leadtools.Services.Tools.Helpers;

namespace Leadtools.Services.Tools.Cache
{
   public class S3BucketCacheManager : ICacheManager
   {
      // Our cache
      private ObjectCache _objectCache;
      private CacheItemPolicy _cacheItemPolicy;
      private bool _isInitialized = false;

      public string WebRootPath { get; set; }

      // Its name
      public const string CACHE_NAME = "S3BucketCache";

      public S3BucketCacheManager(string webRootPath, XElement cacheManagerElement)
      {
         if (string.IsNullOrEmpty(webRootPath))
            throw new ArgumentNullException(nameof(webRootPath));

         _isInitialized = false;
         WebRootPath = webRootPath;

         // Get the values from the XML document
         ParseXml(cacheManagerElement);
      }

      public void ParseXml(XElement cacheManagerElement)
      {
         // Nothing to parse
      }

      private const string CACHE_MANAGER_NAME = "S3BucketCacheManager";
      public string Name
      {
         get { return CACHE_MANAGER_NAME; }
      }

      public string[] GetCacheNames()
      {
         return new string[] { CACHE_NAME };
      }

      public bool IsInitialized
      {
         get { return _isInitialized; }
      }

      public void Initialize()
      {
         Trace.WriteLine("Initializing the S3 bucket cache");

         if (_isInitialized)
            throw new InvalidOperationException("Cache already initialized");

         _objectCache = InitializeS3BucketCache();
         _objectCache.SetName(CACHE_NAME);
         if (_objectCache.Name != CACHE_NAME)
            throw new InvalidOperationException($"ObjectCache implementation {_objectCache.GetType().FullName} does not override SetName");

         DocumentFactory.LoadDocumentFromCache += LoadDocumentFromCacheHandler;
         _cacheItemPolicy = InitializePolicy(_objectCache);
         _isInitialized = true;
      }

      private void LoadDocumentFromCacheHandler(object sender, ResolveDocumentEventArgs e)
      {
         // Get the cache for the document if we have it
         ObjectCache objectCache = GetCacheForDocument(e.LoadFromCacheOptions.DocumentId);
         if (objectCache != null)
            e.LoadFromCacheOptions.Cache = objectCache;
      }

      public void Cleanup()
      {
         if (!_isInitialized)
            return;

         // Detach the handler added in Initialize so it is not attached twice if the manager is re-initialized
         DocumentFactory.LoadDocumentFromCache -= LoadDocumentFromCacheHandler;
         _objectCache = null;
         _cacheItemPolicy = null;
         _isInitialized = false;
      }

      private ObjectCache InitializeS3BucketCache()
      {
         // Called by Initialize the first time the service is run
         // Initialize the global cache object
         string cacheConfigFile = ServiceHelper.GetSettingValue(ServiceHelper.Key_Cache_ConfigFile);
         cacheConfigFile = ServiceHelper.GetAbsolutePath(cacheConfigFile);
         if (string.IsNullOrEmpty(cacheConfigFile))
            throw new InvalidOperationException($"The cache configuration file location in '{ServiceHelper.Key_Cache_ConfigFile}' in the configuration file is empty");

         ObjectCache objectCache = null;

         // Set the base directory of the cache (for resolving any relative paths) to this project's path
         var additional = new Dictionary<string, string>();
         additional.Add(ObjectCache.BASE_DIRECTORY_KEY, WebRootPath);

         try
         {
            // TODO: Set up the connection
            string awsAccessKeyId = "AWS_ACCESS_KEY"; // Update with your access key
            string awsSecretAccessKey = "AWS_SECRET_ACCESS_KEY"; // Update with your secret access key
            RegionEndpoint region = RegionEndpoint.USEast1; // Update to match a region where your S3 buckets are accessible
            AmazonS3Client client = new AmazonS3Client(awsAccessKeyId, awsSecretAccessKey, region);
            string bucketName = "leadtoolstestbucket";
            objectCache = new S3BucketCache(client, bucketName);
         }
         catch (Exception ex)
         {
            throw new InvalidOperationException($"Cannot load cache configuration from '{cacheConfigFile}'", ex);
         }

         return objectCache;
      }

      public static CacheItemPolicy InitializePolicy(ObjectCache objectCache)
      {
         var policy = new CacheItemPolicy();
         return policy;
      }

      private static void VerifyCacheName(string cacheName, bool allowNull)
      {
         if (cacheName == null && allowNull)
            return;

         if (CACHE_NAME != cacheName)
            throw new ArgumentException($"Invalid cache name: {cacheName}", nameof(cacheName));
      }

      public void CheckCacheAccess(string cacheName)
      {
         // No access check is performed for the S3 cache
      }

      public CacheItemPolicy CreatePolicy(string cacheName)
      {
         VerifyCacheName(cacheName, false);
         return _cacheItemPolicy.Clone();
      }

      public CacheItemPolicy CreatePolicy(ObjectCache objectCache)
      {
         // Get the name of this cache
         string cacheName = GetCacheName(objectCache);
         if (cacheName == null)
            throw new InvalidOperationException("Invalid object cache");

         return CreatePolicy(cacheName);
      }

      public CacheStatistics GetCacheStatistics(string cacheName)
      {
         return null;
      }

      public void RemoveExpiredItems(string cacheName)
      {
         VerifyCacheName(cacheName, true);

         // Only supported by FileCache
         FileCache fileCache = _objectCache as FileCache;
         if (fileCache != null)
         {
            fileCache.CheckPolicies();
         }
      }

      public ObjectCache DefaultCache
      {
         get { return _objectCache; }
      }

      public ObjectCache GetCacheByName(string cacheName)
      {
         VerifyCacheName(cacheName, false);
         return _objectCache;
      }

      public string GetCacheName(ObjectCache objectCache)
      {
         if (objectCache == null)
            throw new ArgumentNullException(nameof(objectCache));

         if (objectCache == _objectCache)
            return CACHE_NAME;

         return null;
      }

      public ObjectCache GetCacheForDocument(string documentId)
      {
         if (documentId == null)
            throw new ArgumentNullException(nameof(documentId));

         return _objectCache;
      }

      public ObjectCache GetCacheForDocumentOrDefault(string documentId)
      {
         ObjectCache objectCache = null;
         if (!string.IsNullOrEmpty(documentId))
         {
            objectCache = GetCacheForDocument(documentId);
         }

         if (objectCache == null)
            objectCache = DefaultCache;

         return objectCache;
      }

      public ObjectCache GetCacheForDocument(Uri documentUri)
      {
         if (documentUri == null)
            throw new ArgumentNullException(nameof(documentUri));

         // Get the document ID from the URI and call the other version of this function
         if (!DocumentFactory.IsUploadDocumentUri(documentUri))
            throw new ArgumentException($"{documentUri} is not a valid LEAD document URI", nameof(documentUri));

         string documentId = DocumentFactory.GetLeadCacheData(documentUri);
         return GetCacheForDocument(documentId);
      }

      public ObjectCache GetCacheForDocumentOrDefault(Uri documentUri)
      {
         ObjectCache objectCache = null;
         if (documentUri != null)
         {
            objectCache = GetCacheForDocument(documentUri);
         }

         if (objectCache == null)
            objectCache = DefaultCache;

         return objectCache;
      }

      public ObjectCache GetCacheForLoadFromUri(Uri uri, LoadDocumentOptions loadDocumentOptions)
      {
         return _objectCache;
      }

      public ObjectCache GetCacheForBeginUpload(UploadDocumentOptions uploadDocumentOptions)
      {
         return _objectCache;
      }

      public ObjectCache GetCacheForCreate(CreateDocumentOptions createDocumentOptions)
      {
         return _objectCache;
      }
   }
}
```

Update the InitializeS3BucketCache() method with your AWS access key ID, secret access key, region, and bucket name. For more information on providing credentials to the AWS SDK for .NET, consult the AWS documentation.

This way of setting the credentials is only an example. In your own application, supply the credentials in a way that does not leave your access keys exposed in source code.
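One way to keep the keys out of source code is to read them from the environment at startup. The sketch below assumes the standard `AWS_ACCESS_KEY_ID` and `AWS_SECRET_ACCESS_KEY` variable names, reads both, and fails fast if either is missing; the `AwsCredentialSource` class is hypothetical. The AWS SDK for .NET also checks these variables as part of its default credential chain, so constructing the client with only a region (for example, `new AmazonS3Client(RegionEndpoint.USEast1)`) may make such code unnecessary.

```csharp
using System;

// Hypothetical helper; not part of LEADTOOLS or the AWS SDK.
public static class AwsCredentialSource
{
   public const string AccessKeyVariable = "AWS_ACCESS_KEY_ID";
   public const string SecretKeyVariable = "AWS_SECRET_ACCESS_KEY";

   // Returns the access key ID and secret access key from environment variables,
   // throwing if either is missing so misconfiguration is caught when the service starts.
   public static (string AccessKeyId, string SecretAccessKey) FromEnvironment()
   {
      string accessKeyId = Environment.GetEnvironmentVariable(AccessKeyVariable);
      string secretAccessKey = Environment.GetEnvironmentVariable(SecretKeyVariable);

      if (string.IsNullOrEmpty(accessKeyId) || string.IsNullOrEmpty(secretAccessKey))
         throw new InvalidOperationException(
            $"Set the {AccessKeyVariable} and {SecretKeyVariable} environment variables.");

      return (accessKeyId, secretAccessKey);
   }
}
```

The two returned values would take the place of the hard-coded strings passed to the AmazonS3Client constructor in InitializeS3BucketCache().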

```csharp
private ObjectCache InitializeS3BucketCache()
{
   // Called by Initialize the first time the service is run
   // Initialize the global cache object
   string cacheConfigFile = ServiceHelper.GetSettingValue(ServiceHelper.Key_Cache_ConfigFile);
   cacheConfigFile = ServiceHelper.GetAbsolutePath(cacheConfigFile);
   if (string.IsNullOrEmpty(cacheConfigFile))
      throw new InvalidOperationException($"The cache configuration file location in '{ServiceHelper.Key_Cache_ConfigFile}' in the configuration file is empty");

   ObjectCache objectCache = null;

   // Set the base directory of the cache (for resolving any relative paths) to this project's path
   var additional = new Dictionary<string, string>();
   additional.Add(ObjectCache.BASE_DIRECTORY_KEY, WebRootPath);

   try
   {
      // TODO: Set up the connection
      string awsAccessKeyId = "AWS_ACCESS_KEY"; // Update with your access key
      string awsSecretAccessKey = "AWS_SECRET_ACCESS_KEY"; // Update with your secret access key
      RegionEndpoint region = RegionEndpoint.USEast1; // Update to match a region where your S3 buckets are accessible
      AmazonS3Client client = new AmazonS3Client(awsAccessKeyId, awsSecretAccessKey, region);
      string bucketName = "leadtoolstestbucket";
      objectCache = new S3BucketCache(client, bucketName);
   }
   catch (Exception ex)
   {
      throw new InvalidOperationException($"Cannot load cache configuration from '{cacheConfigFile}'", ex);
   }

   return objectCache;
}
```

Set the Cache in the Document Service

In the Solution Explorer, open the ServiceHelper.cs file. Update the CreateCache function to use the S3BucketCacheManager.

```csharp
public static void CreateCache()
{
   // Called by InitializeService the first time the service is run
   // Initialize the global ICacheManager object with the S3 bucket cache manager
   ICacheManager cacheManager = new S3BucketCacheManager(WebRootPath, null);

   // Original configuration-file-based setup, replaced by the S3 bucket cache manager:
   /*
   string cacheManagerConfigFile = GetSettingValue(Key_Cache_CacheManagerConfigFile);
   cacheManagerConfigFile = GetAbsolutePath(cacheManagerConfigFile);
   if (!string.IsNullOrEmpty(cacheManagerConfigFile))
   {
      using (var stream = File.OpenRead(cacheManagerConfigFile))
         cacheManager = CacheManagerFactory.CreateFromConfiguration(stream, WebRootPath);
   }
   else
   {
      // Try to create the default ICacheManager directly (backward compatibility)
      cacheManager = new DefaultCacheManager(WebRootPath, null);
   }
   */

   if (cacheManager == null)
      throw new InvalidOperationException("Could not find a valid LEADTOOLS cache system configuration.");

   cacheManager.Initialize();
   _cacheManager = cacheManager;
}
```

Run the Project

To run the project, press F5 or select Debug -> Start Debugging.

If the steps were followed correctly, the application opens two browser windows: one for the Document Service and one for the Document Viewer.

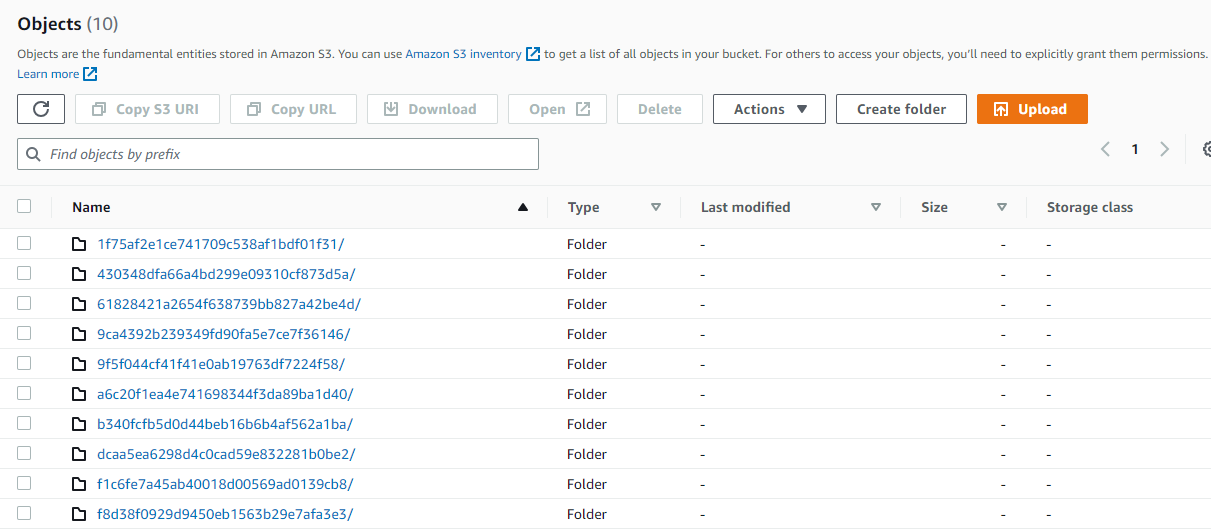

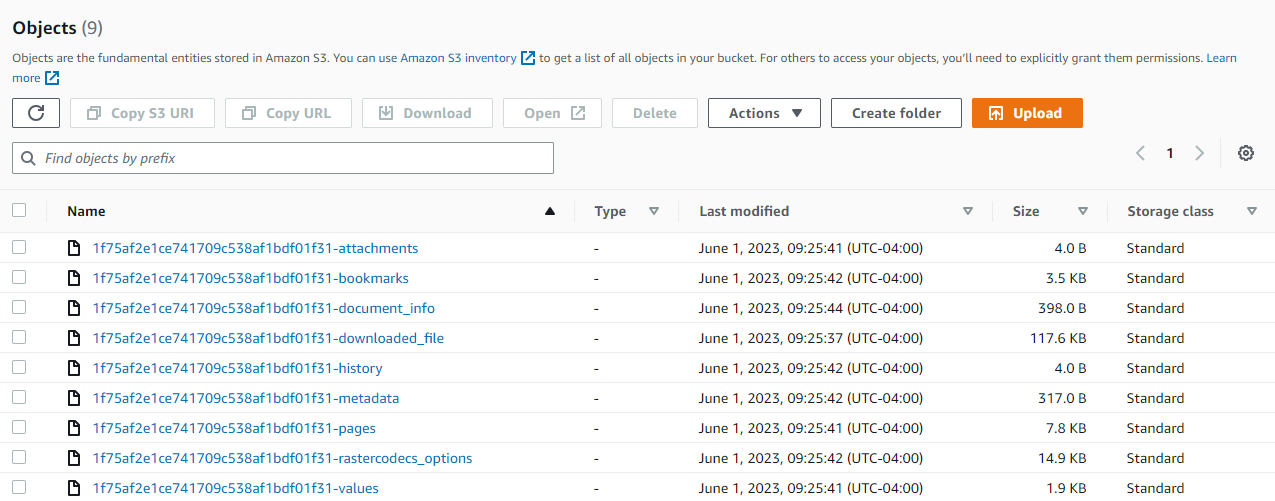

You can view the contents of the cache in the AWS Management Console by opening the S3 bucket used by the cache. Each cache region appears as a folder containing that region's entries.

For your reference, you can download the S3BucketCache.cs and S3BucketCacheManager.cs files here.

Wrap-up

This tutorial showed how to set up an AWS S3 Bucket Cache to use with the LEADTOOLS .NET Framework Document Service and HTML5/JS Document Viewer.