Extract Text from Image with OCR - React JS

This tutorial shows how to run OCR text recognition on an image in a React JS application using the LEADTOOLS SDK.

| Overview | |
|---|---|
| Summary | This tutorial covers how to run OCR on an image in a React JS application. |
| Completion Time | 30 minutes |
| Visual Studio Project | Download tutorial project (474 KB) |
| Platform | React JS Web Application |
| IDE | Visual Studio - Service & Visual Studio Code - Client |
| Development License | Download LEADTOOLS |

Required Knowledge

Get familiar with the basic steps of creating a project and loading an image inside an ImageViewer by reviewing the Add References and Set a License and Display Images in an Image Viewer tutorials, before working on the Extract Text from Image with OCR - React JS tutorial.

Make sure that Yarn is installed so that a React application can be created quickly from the command line. If Yarn is not installed, it can be found at:

https://classic.yarnpkg.com/en/docs/install/#windows-stable

Create the Project and Add LEADTOOLS References

Start with a copy of the project created in the Display Images in an Image Viewer tutorial. If you do not have a copy of that tutorial project, follow the steps inside that tutorial to create it.

The references needed depend upon the purpose of the project. For this project, the following JS files are needed. They are located in <INSTALL_DIR>\LEADTOOLS22\Bin\JS:

- Leadtools.js

- Leadtools.Controls.js

- Leadtools.Document.js

- Leadtools.Annotations.Engine.js

Make sure to copy these files to the public\common folder and import them in the public\index.html file.

For more information on which files to include for your JavaScript application, see Files to be Included with your Application.
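If a script tag is missing or a path is wrong, the first symptom is usually a reference error on `lt`. The sketch below is one way to check early that the expected namespaces are present. The helper name `findMissingNamespaces` is hypothetical and not part of LEADTOOLS; the namespaces checked (`lt`, `lt.Controls`, `lt.Document`) are the ones this tutorial's code uses.

```javascript
// Hypothetical helper (not part of LEADTOOLS): returns the dotted namespace
// paths that cannot be resolved starting from `root`.
// `root` is normally `window`; it is a parameter so the check can run anywhere.
function findMissingNamespaces(root, expected = ["lt", "lt.Controls", "lt.Document"]) {
  return expected.filter((path) => {
    let current = root;
    for (const part of path.split(".")) {
      // A missing link anywhere along the path means the namespace is absent.
      if (current == null || !(part in Object(current))) return true;
      current = current[part];
    }
    return current == null;
  });
}

// In the browser, run the check once the page scripts have loaded.
if (typeof window !== "undefined") {
  const missing = findMissingNamespaces(window);
  if (missing.length > 0) {
    console.error("LEADTOOLS scripts not loaded: " + missing.join(", "));
  }
}
```

An empty result means every listed namespace resolved; anything else names the script that likely failed to load.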

Set the License File

The License unlocks the features needed for the project. It must be set before any toolkit function is called. For details, including tutorials for different platforms, refer to Setting a Runtime License.

There are two types of runtime licenses:

- Evaluation license, obtained at the time the evaluation toolkit is downloaded. It allows the toolkit to be evaluated.

- Deployment license. If a Deployment license file and developer key are required, refer to Obtaining a License.
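As a rough illustration of setting the license before any other toolkit call, the sketch below wraps the callback-style `lt.RasterSupport.setLicenseUri` call in a Promise. It assumes the callback receives an object with a boolean `result` property, as in the Add References and Set a License tutorial. The wrapper name `setLicense` and the license path and key in the usage comment are placeholders, not values from this tutorial.

```javascript
// Hypothetical wrapper: resolves when the license is accepted, rejects otherwise.
// `lt` is passed in explicitly so the function does not depend on a global.
function setLicense(lt, licenseUrl, developerKey) {
  return new Promise((resolve, reject) => {
    lt.RasterSupport.setLicenseUri(licenseUrl, developerKey, (setLicenseResult) => {
      // Assumption: `result` is true when the license was set successfully.
      if (setLicenseResult.result) {
        resolve(setLicenseResult);
      } else {
        reject(new Error("LEADTOOLS license is missing, invalid, or expired."));
      }
    });
  });
}

// Example usage in the browser (placeholder path and key):
//   setLicense(window.lt, "/common/LEADTOOLS.lic.txt", "YOUR_DEVELOPER_KEY")
//     .then(() => console.log("License set"))
//     .catch((error) => alert(error.message));
```

Wrapping the call this way lets the rest of the startup code wait for the license before creating the viewer or contacting the service.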

Import LEADTOOLS Dependencies

Open the index.html file in the public folder. Add the following script tags inside the head element to import the LEADTOOLS dependencies.

    <head>
      <meta charset="utf-8" />
      <link rel="icon" href="%PUBLIC_URL%/favicon.ico" />
      <meta name="viewport" content="width=device-width, initial-scale=1" />
      <meta name="theme-color" content="#000000" />
      <meta name="description" content="Web site created using create-react-app" />
      <script src="https://code.jquery.com/jquery-3.4.1.min.js"
        integrity="sha256-CSXorXvZcTkaix6Yvo6HppcZGetbYMGWSFlBw8HfCJo="
        crossorigin="anonymous"></script>
      <!--Import LEADTOOLS dependencies-->
      <script type="text/javascript" src="/common/Leadtools.js"></script>
      <script type="text/javascript" src="/common/Leadtools.Controls.js"></script>
      <script type="text/javascript" src="/common/Leadtools.Demos.js"></script>
      <script type="text/javascript" src="/common/Leadtools.Document.js"></script>
      <!--Import our script with our Leadtools Logic-->
      <script src="/common/DocumentHelper.js"></script>
      <script src="/common/app.js"></script>
      <link rel="manifest" href="%PUBLIC_URL%/manifest.json" />
      <title>React App</title>
    </head>

Create the DocumentHelper Class and Connect to Document Service

With the project created, the references added, the license set, and the ImageViewer initialized, coding can begin.

Inside the common folder in the public folder, create a new JS file named DocumentHelper.js. Add a new class to the new JS file named DocumentHelper. Add the following code inside the DocumentHelper class.

    class DocumentHelper {
      // Startup function
      static connectToDocumentService = () => {
        let serviceStatus;
        document.addEventListener("DOMContentLoaded", function () {
          serviceStatus = document.getElementById("serviceStatus");
          // To communicate with the DocumentsService, it must be running!
          // Change these parameters to match the path to the service.
          lt.Document.DocumentFactory.serviceHost = "http://localhost:40000";
          lt.Document.DocumentFactory.servicePath = "";
          lt.Document.DocumentFactory.serviceApiPath = "api";
          serviceStatus.innerHTML = "Connecting to service " + lt.Document.DocumentFactory.serviceUri;
          lt.Document.DocumentFactory.verifyService()
            .done(function (serviceData) {
              let setStatus = function () {
                serviceStatus.innerHTML = "\n" + "\u2022" + " Service connection verified!";
              };
              setTimeout(setStatus, 1500);
            })
            // showServiceError is a static member, so it must be referenced through the class
            .fail(DocumentHelper.showServiceError)
            .fail(function () {
              serviceStatus.innerHTML = "Service not properly connected.";
            });
        });
      };

      static showServiceError = (jqXHR, statusText, errorThrown) => {
        alert("Error returned from service. See the console for details.");
        const serviceError = lt.Document.ServiceError.parseError(jqXHR, statusText, errorThrown);
        console.error(serviceError);
      };

      static log = (message, data) => {
        const outputElement = document.getElementById("output");
        if (outputElement) {
          const time = (new Date()).toLocaleTimeString();
          const textElement = document.createElement("p");
          textElement.innerHTML = "\u2023" + " [" + time + "]: " + message;
          textElement.style = "text-align: left;";
          // appendChild takes a single node; the new entry goes at the end of the output
          outputElement.appendChild(textElement);
        }
        if (!data)
          console.log(message);
        else
          console.log(message, data);
      };
    }
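The service address is split across three `DocumentFactory` properties: `serviceHost`, `servicePath`, and `serviceApiPath`. If the Document Service runs on a different port or under a virtual directory, a small helper can derive the three values from one URL. This is only a sketch: `toServiceSettings` and `applyServiceSettings` are hypothetical names, and it assumes the last path segment is the API path, as with the `api` value used in this tutorial.

```javascript
// Hypothetical helper: splits a full service URL into the three settings.
// Assumption: the last path segment is the API path (for example "api").
function toServiceSettings(serviceUrl) {
  const url = new URL(serviceUrl);
  const segments = url.pathname.split("/").filter(Boolean);
  const serviceApiPath = segments.pop() ?? "";
  return {
    serviceHost: url.origin,
    servicePath: segments.join("/"),
    serviceApiPath,
  };
}

// Copies the settings onto a factory-like object and returns it.
function applyServiceSettings(factory, settings) {
  return Object.assign(factory, settings);
}

// Example usage in the browser:
//   applyServiceSettings(lt.Document.DocumentFactory,
//     toServiceSettings("http://localhost:40000/api"));
```

Keeping the address in one string makes it easier to change when IIS Express assigns a different port.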

Open the App.js file in the src folder and replace the HTML with the following code:

    import React from 'react';
    import './App.css';

    function App() {
      return (
        <div className="App">
          <header className="App-header">
            <p id="serviceStatus"></p>
            <h3>React OCR Example</h3>
            <div id="btnMenu">
              <input type="file" id="file-input" accept=".jpg,.jpeg,.png"></input>
              <button id="addToViewer">Add Image To Viewer</button>
              <button id="ocrButton">Ocr</button>
            </div>
            <div id="imageViewerDiv"></div>
            <h6 id="output"></h6>
          </header>
        </div>
      );
    }

    export default App;

Improve the Visuals of the Project

Navigate to App.css inside the src folder, which styles the HTML elements created in App.js. Add the following code to improve the visuals of the application.

    .App {
      text-align: center;
    }

    @media (prefers-reduced-motion: no-preference) {
      .App-logo {
        animation: App-logo-spin infinite 20s linear;
      }
    }

    .App-header {
      background-color: #282c34;
      min-height: 100vh;
      display: flex;
      flex-direction: column;
      align-items: center;
      justify-content: center;
      font-size: calc(10px + 2vmin);
      color: white;
    }

    .App-link {
      color: #61dafb;
    }

    @keyframes App-logo-spin {
      from {
        transform: rotate(0deg);
      }
      to {
        transform: rotate(360deg);
      }
    }

    #btnMenu {
      background-color: #555555;
      display: flex;
      flex-direction: column;
      width: 350px;
      padding: 10px;
    }

    #output {
      background-color: #888888;
      width: 70%;
      padding-left: 15px;
      padding-right: 15px;
    }

    #imageViewerDiv {
      background-color: rgba(170, 170, 170, 1);
      border-radius: 1%;
      margin-top: 5px;
      height: 400px;
      width: 400px;
    }

Add the OCR Text Code

Open app.js in the public\common folder and add the following code before the end of the window.onload function.

    // Create an Image Viewer
    let imageViewerDiv = document.getElementById("imageViewerDiv");
    const createOptions = new lt.Controls.ImageViewerCreateOptions(imageViewerDiv);
    this.imageViewer = new lt.Controls.ImageViewer(createOptions);
    this.imageViewer.zoom(lt.Controls.ControlSizeMode.fit, 1, this.imageViewer.defaultZoomOrigin);
    this.imageViewer.viewVerticalAlignment = lt.Controls.ControlAlignment.center;
    this.imageViewer.viewHorizontalAlignment = lt.Controls.ControlAlignment.center;
    this.imageViewer.autoCreateCanvas = true;
    this.imageViewer.imageUrl = "https://demo.leadtools.com/images/jpeg/cannon.jpg";
    // Keep a local reference, because `this` is different inside the click handlers
    const viewer = this.imageViewer;

    // Grab the HTML elements
    let fileList = document.getElementById("file-input");
    let btn = document.getElementById("addToViewer");
    btn.onclick = function () {
      // Show the first selected file in the viewer
      let file = fileList.files[0];
      if (!file) return;
      viewer.imageUrl = window.URL.createObjectURL(file);
    };

    // Create the OCR button
    let ocrBtn = document.getElementById("ocrButton");
    // Create the click event
    ocrBtn.onclick = async function () {
      // Before running OCR, check that an image has been chosen
      if (!fileList.files[0]) {
        alert("No image chosen for OCR. Select an image via Choose File before running OCR.");
      } else {
        try {
          let leadDoc = await lt.Document.DocumentFactory.loadFromFile(fileList.files[0], new lt.Document.LoadDocumentOptions());
          console.log("Document loaded and has cache id: " + leadDoc.documentId);
          let leadRectD = lt.LeadRectD.empty;
          DocumentHelper.log("OCR Starting...");
          // Appending the recognized text lengthens the page, so scroll to the output.
          let myelem = document.getElementById("output");
          let scrollOptions = {
            left: myelem.offsetParent.offsetWidth,
            top: myelem.offsetParent.offsetHeight,
            behavior: 'smooth'
          };
          window.scrollTo(scrollOptions);
          let CharacterData = await leadDoc.pages.item(0).getText(leadRectD);
          console.log(CharacterData);
          DocumentHelper.log("OCR'd Text : " + CharacterData.text);
          window.scrollTo(scrollOptions);
        } catch (error) {
          console.error(error);
        }
      }
    };
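The `accept` attribute on the file input only filters the file dialog, so the selected file can still be something other than an image. The sketch below checks the extension before the file is sent to the service. `isSupportedImage` is a hypothetical helper, and the extension list mirrors the `accept=".jpg,.jpeg,.png"` value used in App.js.

```javascript
// Extensions taken from the file input's accept attribute in App.js.
const ACCEPTED_EXTENSIONS = [".jpg", ".jpeg", ".png"];

// Hypothetical helper: true when the file name ends in an accepted extension.
// The comparison ignores case, so "SCAN.PNG" is treated like "scan.png".
function isSupportedImage(fileName) {
  const dot = fileName.lastIndexOf(".");
  if (dot < 0) return false; // no extension at all
  return ACCEPTED_EXTENSIONS.includes(fileName.slice(dot).toLowerCase());
}

// Example usage inside the OCR click handler:
//   const file = fileList.files[0];
//   if (file && !isSupportedImage(file.name)) {
//     alert("Please choose a .jpg, .jpeg, or .png image.");
//     return;
//   }
```

This is a convenience check only; the Document Service remains the authority on whether a file can be loaded.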

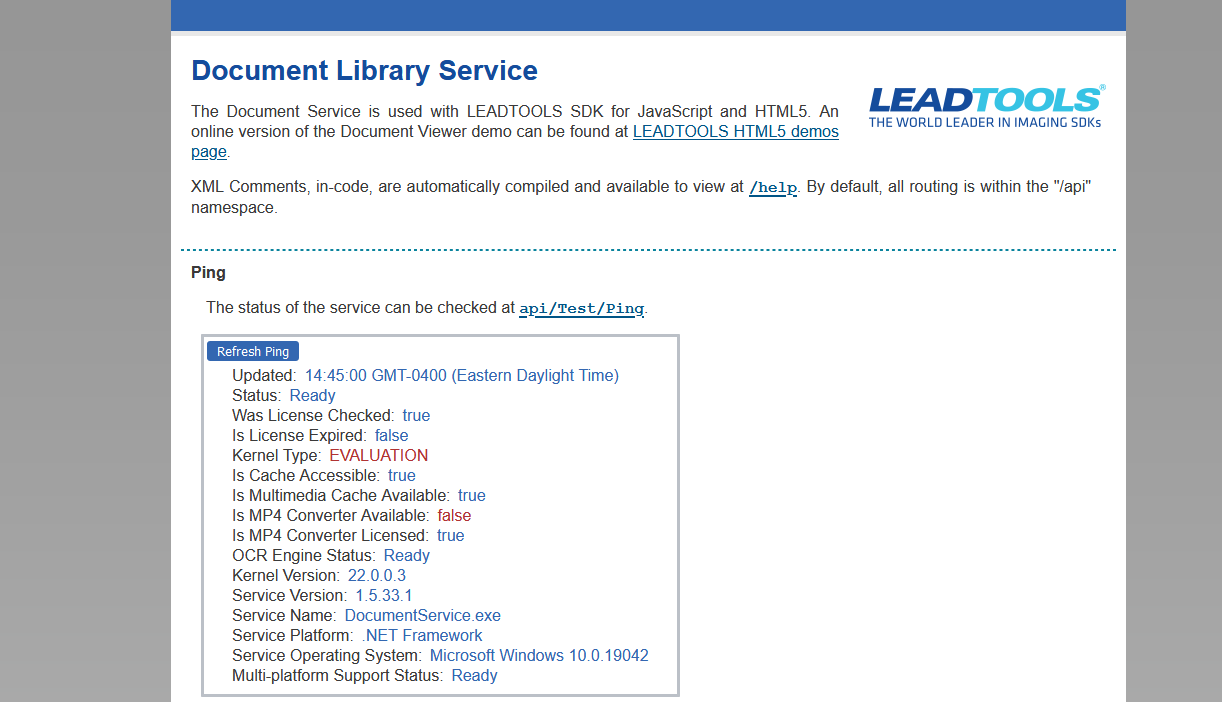

Run the Document Service

In order to run this application successfully, the LEADTOOLS .NET Framework Document Service is required. The LEADTOOLS .NET Framework Document Service project is located at <INSTALL_DIR>\LEADTOOLS22\Examples\Document\JS\DocumentServiceDotNet\fx.

Note

Only the .NET Framework Document Service supports uploadDocumentBlob, so this tutorial will not work with the .NET Core Document Service.

Open DocumentService.csproj and run the project using IIS Express. Once the Document Service project is running in Visual Studio, the webpage will show that the service is listening. The client can then communicate with the Document Service, which processes the image data and returns the text extracted from the image.
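Because OCR depends on the service being reachable, the client may want a plain yes or no answer before enabling the Ocr button. The sketch below wraps `verifyService()` and its `done` and `fail` callbacks, the same calls used in DocumentHelper.js, in a Promise that resolves to a boolean. The function name `checkService` is hypothetical.

```javascript
// Hypothetical helper: resolves to true when the service responds and to
// false when verification fails. It never rejects, so callers can branch on
// the boolean directly.
function checkService(factory) {
  return new Promise((resolve) => {
    factory.verifyService()
      .done(() => resolve(true))
      .fail(() => resolve(false));
  });
}

// Example usage in the browser:
//   checkService(lt.Document.DocumentFactory).then((isUp) => {
//     document.getElementById("ocrButton").disabled = !isUp;
//   });
```

Resolving to `false` instead of rejecting keeps the calling code short when a stopped service is an expected condition during development.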

Run the Project

To run the OCR project, open a new terminal window and cd into the root of the project. From there, run the command yarn start. If the node modules are not included with the project, run the command npm install first.

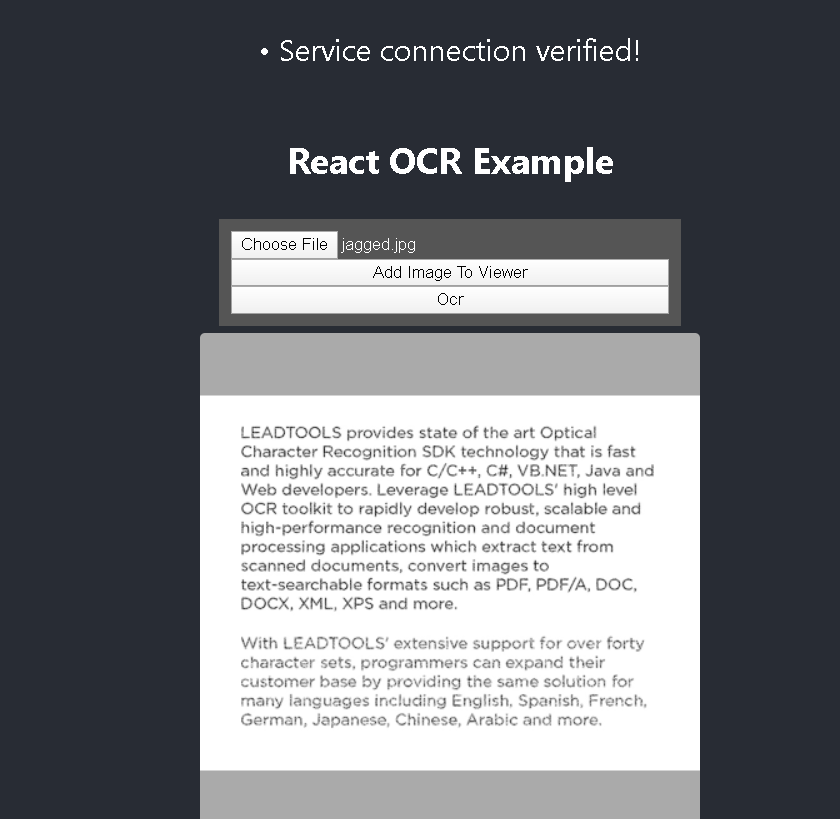

To test the project, follow the steps below:

- A default image will appear upon project start. To load the image you would like to OCR, select Choose File, which will bring up a file dialog.

-

Once you select the image you wish to OCR press Add Image To Viewer.

-

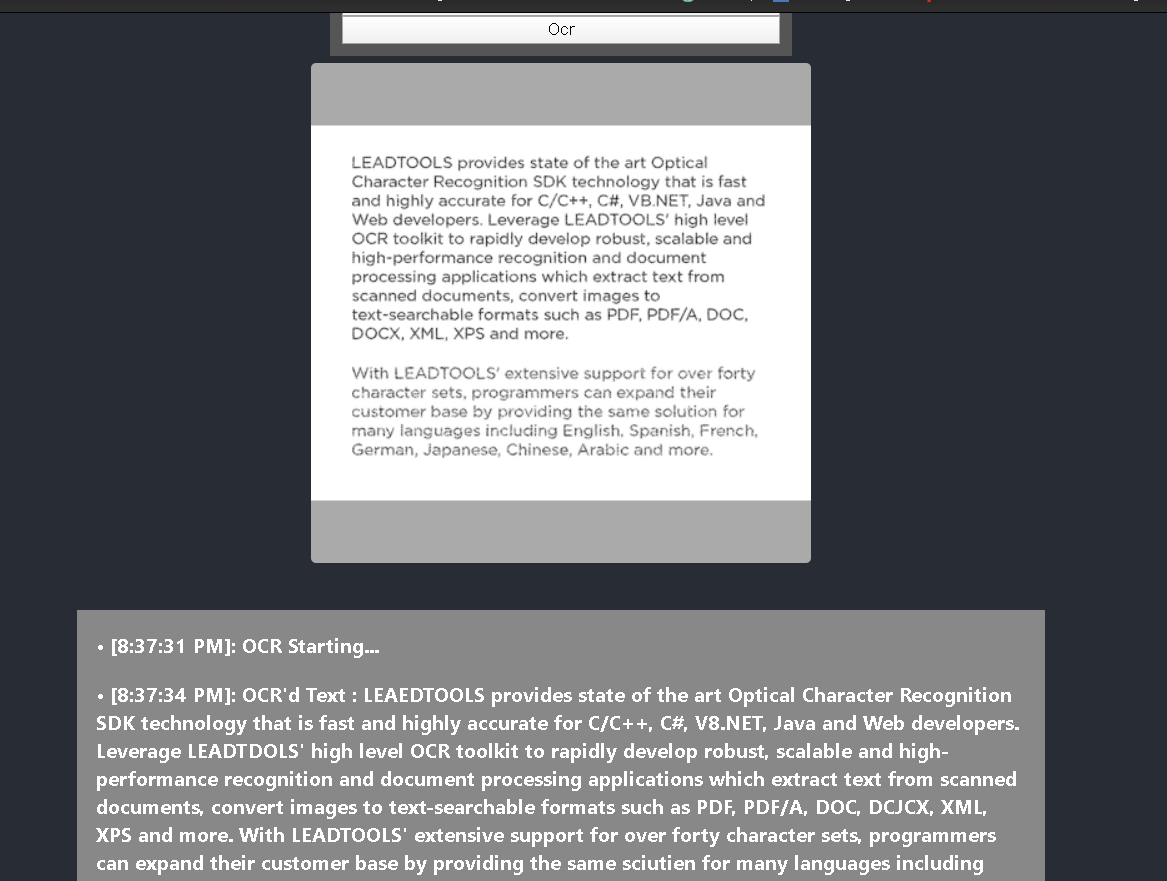

To OCR the image and print the results below the

ImageViewer, press Ocr.

Wrap-Up

This tutorial showed how to recognize text from an image in a React JS application.